Superintelligence or not, we are stuck with thinking

Complex cognitive tasks would not disappear in a world with "artificial superintelligence" (whatever that means)

In November 2025, Anthropic released Opus 4.5, the penultimate version (Opus 4.6 is already out) of its artificial intelligence models focused on programming. Since then, I have kept seeing programmers on social media say more or less the same thing: this model is scary.

After trying it recently for the first time, I agree. Not since the initial release of ChatGPT (then based on GPT-3.5) in 2022 had I felt that mix of awe and anxiety, seriously questioning whether my profession will still exist in three years. That shock is probably exaggerated, though, by how extremely good these models are at building prototypes and proofs of concept, compared with long-term work in real production environments.

After this experience of creating an application in a few hours from a handful of vague instructions, I’ve been thinking about the implications that AI and its possible future development may have for human cognitive tasks. Basically: can a world exist in which humans don’t have to “think”? One where every cognitively difficult task can be delegated to an AI?

This question, for me, is quite important. Perhaps this is partly because, as with others, my identity has been largely built around thinking and solving complex problems. Having completed a difficult degree, earning a good salary for complicated work, and learning and understanding “intellectual” subjects all grant a certain status in our society. That this part of the human experience could cease to be useful feels almost like a personal affront. An identity crisis, we might also call it.

But setting aside these parochial concerns, I believe this matter transcends the selfish interests of the professional or intellectual classes. The name we give our species is homo sapiens, that is, the hominin that knows. What we think differentiates us from the rest of life on this planet seems to be precisely that: “that we know.” If knowing ceased to be productively useful and became a sport like weightlifting or sprinting, perhaps we should consider changing how we refer to ourselves.

Obviously, human beings can never stop being “intelligent.” No matter how much AI there is, we won’t start seeing the world as a donkey does (to pick an animal considered, unfairly, not very bright). But the question I’m asking is whether humans will need to undertake complex tasks not for leisure, nor because it’s part of our identity and purpose, but out of pure productive, existential necessity: maintaining and improving our technology, economy, and society. Perhaps this distinction seems irrelevant to some, but I believe that the absence of any “urgency” or importance in understanding the world would pose a qualitatively different scenario for our species.

Again, the root of this concern is surely largely personal. To give an example, I haven’t devoted more than two minutes of thought to the impact AI has or may have on art. However, I know many artists are treating it as an existential threat. Obviously, we all look out for our own when it comes to what we consider important or not.

But setting aside philosophical reflections about why something is important or not (Camus already taught us that nothing, and everything, is), let’s return to the original dilemma: is it possible for a world to exist in which humans don’t need, productively speaking, to perform complex cognitive tasks?

If we consider the best (or worst) case scenario, and assume that AI is capable in the short term of directly replacing certain professions, the usual process of creative destruction from new technologies suggests that new professions would be created at a higher level of abstraction, just as the internet wiped out entire professional sectors but created others in the process.

In this case, as long as AI remains below human capabilities, we would still need to perform productive cognitive tasks, precisely at the levels of abstraction that AI cannot reach. In this world, we could argue that even greater cognitive effort would be needed to manage the growing complexity of a world filled with “sub-intelligences.”

However, AI seems to have the potential to be something qualitatively different from other new technologies.

Current models (LLMs) probably have too many limitations to become a genuinely general intelligence, but let’s imagine that, eventually, some other new paradigm ends up creating an intelligence comparable to the human one.

If this were the case (and nobody knows whether it might happen in five or a hundred years, or not at all), every higher level of abstraction would be automatically automated (pardon the redundancy). Excel automated certain accounting tasks, but created just as many new ones. In contrast, a general AI would automate current tasks and, iteratively, all the new ones it would create in the process, since, by definition, it could handle any technical task a human could perform.

I will ignore here, perhaps unjustifiably, the matter of resources and energy efficiency, since although an AI may in principle automate a human task, it might do so consuming more resources and in a less efficient manner. In this case, there would still be room for human beings for purely economic reasons. This is no trivial matter, as the struggle for survival has spent millions of years turning us into extremely efficient machines. But let’s ignore this issue here, and pretend that these AIs are as efficient as us or that there are unlimited resources to power them.

Evidently, an AI exactly identical to the human mind could not exist, unless it were itself human. Therefore, by “comparable” we should understand that it is slightly better at some tasks and slightly worse at others. In this case, there would still be room for human cognition in those tasks we continue to dominate.

For the sake of argument, let’s then imagine an AI superior to the human mind in all productive tasks requiring complex cognition.

Here we need to take a small detour to examine the matter of superintelligence itself, since the premise that a superintelligence is possible, which I’m going to adopt here, does not seem at all obvious to me.

Its possible existence is something that is often taken for granted, as if intelligence were a one-dimensional property like height or weight, and therefore intelligences superior to the human one could naturally exist. This intuition comes largely from the false assumption that animals are, in the abstract, less intelligent than humans, ignoring that certain animals are undoubtedly better than us at some mental tasks (spatial memory, navigation…), and that what makes us special are certain cognitive abilities unique to us. This is very different from an abstract and one-dimensional measure of “intelligence.”

So perhaps, due to physical, computational, or ontological limitations, what differentiates the human brain from those of other animals is a particular emergent property of minds: abstract thought, logic, consciousness, or whatever it may be. Perhaps human abstract thought is a qualitative leap that cannot be “incremented.” We could even speculate, quite presumptuously, that human abstract thought is the highest possible exponent of “intelligence” in this universe.

That said, a human being today could be considered “superintelligent” compared to a homo sapiens born before the Neolithic revolution, even though both genetically possess the same innate capabilities. Human knowledge has been a collective and cumulative process of the human “hive mind,” in which the whole is greater than the sum of its parts. AIs would probably become integral parts of this whole, not something that surpasses it.

The hypothesis of the “singularity” is also very popular: a superintelligence would improve itself iteratively and exponentially toward infinity, producing a kind of hyperintelligent singularity. I find this hypothesis not very credible for the same reasons we’ve already seen. Furthermore, what makes us think that an intelligence can automatically produce a superior one? Human beings haven’t achieved this yet, and it remains to be seen whether they will. And if they do, it won’t be because of their innate intelligence, as we’ve said, but because of a process of knowledge accumulation that has lasted thousands of years.

But to continue the exploration, let’s set these questions aside. Let’s take it as given that a superintelligence can exist, and that humans can create it.

In this world, would it make sense for humans to participate in complex cognitive tasks? Or could we delegate all intellectual thought to this super AI and enjoy a beer on a terrace while simply directing the swarm of super-servants?

“AI, build a bridge.” “AI, organize a trip to Mars.” Etc.

Here there are several issues. The first is: would it be possible, in principle, to direct an AI that is by definition more intelligent than us? If the answer is no, the dilemma is already solved: since we can never delegate every cognitive task (because a superintelligence is not directable by us), we have no choice but to settle for directable AIs, that is, cognitively inferior ones. That, and making sure this newly independent superintelligence doesn’t declare war on us (in this science fiction scenario we would certainly need to have preserved our productive cognitive capabilities, or risk losing the war in the first minute).

But really, I don’t believe that this vision of an out-of-control superintelligence is something inevitable. When we imagine an entity more intelligent than us, we tend to attribute human qualities to it that, in my view, are not justified. This seems intuitively correct to us because we only know one “intelligent” species, namely the human one, and therefore we assume that every intelligent species will be similar to ours.

In what case would a superintelligence escape our control? In the case where it had its own motivations and ends: perhaps a desire to dominate, or to be free, or simply to pursue objectives different from those of humans. But these qualities have been instilled in human beings through millions of years of natural selection, of constant struggle to survive. That is why we want to eat, drink, have sex, have power and status, protect our family and friends, make sense of the world around us, etc.

Evidently, all these basic qualities are mediated by culture and are enormously fluid (each human generation changes objectives as technology, society, and culture evolve; the image humans have of “a perfect world” constantly evolves), but the fact that we have ends in themselves and a strong motivation to achieve them is largely explained by our belonging to the animal kingdom.

An intelligence without ends could not exist, since it would have no motivation to think, to make sense of the world. Something without ends is something static, dead. Therefore, a superintelligence would of course have goals, but like today’s AIs, they would be ends directly or indirectly defined by human beings (more or less successfully), and not the ends that motivate living beings, including ourselves.

But to be fair, although intelligences that are not the product of natural selection don’t necessarily need to want to survive and dominate their environment, we cannot be certain that they wouldn’t develop their own ends either; perhaps such ends are an irreducible quality of all general intelligence. Since we know of no examples other than human beings, we cannot definitively commit to one possibility or the other.

Putting ourselves in the case where this superintelligence did develop its own objectives, alien to those of humans, whether through “misunderstanding” or rebellion, human cognitive capabilities would then take on enormous importance, whether to redirect a society accustomed to delegating much of its work to this AI, or in the worst case, to defend against a hypothetically hostile superintelligence.

Examining the opposite case, where in principle it is possible for humanity to successfully align a superintelligence with its ends, would it then be possible to have that world in which human beings delegate all their complex thinking regarding productive tasks to an AI?

This alignment is precisely the crux of the matter. Could a human being who has delegated all productive cognitive tasks align a superintelligence with their objectives? How does this alignment occur? One could argue that only one generation of “expert” humans needs to produce this superintelligence perfectly aligned with human ends, and subsequent generations could relax and reap the rewards.

But would alignment work like this? A button you press once and that’s it? Obviously not. Alignment with human ends would be a fluid and changing product, since the ends and desires of humanity are in constant flux with its evolution.

Moreover, AI’s own participation in directing these ends would change the state of humanity, and with it its ends, and with it its alignment, and so on, which makes alignment an iterative and non-deterministic process. Therefore, not even a nearly omniscient superintelligence could “guess” the result of its continuous interaction with humanity.

Even in the fantastical world where humanity’s ends didn’t change with generations, it would be far-fetched to think these could be presented and programmed into an AI in a perfect and finished form, given that humanity wants contradictory things, realizes upon getting what it wants that it didn’t actually want it, or simply doesn’t know what it wants.

And furthermore, until now, to simplify the argument, I’ve been referring to humanity as a whole, when it’s obvious that this is a fantasy. Humanity is divided by ideological, national, economic, political, cultural, religious, familial, personal and other interests. There exist the interests of eight billion humans (and not even a single individual’s interests are coherent) that amalgamate, ally, clash, and constantly evolve.

The alignment of a superintelligence would therefore be something eminently political. Based on whose interests, or which group’s?

In short, the alignment of a superintelligence would be a constant and iterative process because:

1. Humanity has no way of expressing or programming its objectives in a definitive manner, as these are contradictory, ambiguous, and ultimately indefinable.
2. Even if humanity had this capacity “in a static state,” these objectives are fluid and continuously change with technological, social, and cultural shifts, including the constant effect of the aligned superintelligence’s own actions, which makes the attempt to “guess” these objectives a non-deterministic task.
3. Even if these objectives didn’t change, there are no common goals for all of humanity, but rather countless particular interests of groups, classes, nations, individuals, etc.

Therefore, humanity (or the human groups with the power to carry out this task) would always need the capacity to realign the AI if they don’t want to be subordinated to past generations or to other human groups of their own generation.

The question is, then, whether this capacity could be maintained without having to perform complex cognitive tasks. The answer, in my understanding, is no.

Alignment would be a task that, irreducibly, would originate from human beings (if we want to maintain our own agency and ensure it is our objectives that are being pursued). This alignment would be based on profound ethical and political reflections (what world do we want? what suffering is avoidable? what risks should we take?), which would stem from changing human morality. This morality would be, I repeat, something irreducibly human, since unfortunately (or fortunately) there are no stone tablets with commandments that prescribe a definitive and finished morality for the universe.

Rather, morality and normativity are ultimately something arbitrary, a purely human quality that we don’t observe in the rest of nature, which demonstrates absolute indifference toward moral questions (does the universe care whether humanity, or the rest of life, is happy, suffers, or goes extinct?). And I’m not referring here to morality as meaning “being good,” but as the quality of wanting certain ends, whether for yourself, your family, or all of humanity, whether selfish, solidarity-minded, cruel, or altruistic.

Defining these ends, as a prerequisite for aligning a superintelligence, would in itself be a complex cognitive task, and even more so if we consider that “ought” cannot be separated from “is.” That is to say, to define where it’s going, humanity would need to know where it stands, explain itself to itself, understand its role in the universe, attempt to explain the most elementary mysteries of existence, etc. Every human religion has its ethical and normative face, and that face always rests on a particular ontology, a particular interpretation of reality.

This constant search for the “truth” of the universe and our place in it would therefore be an integral part of this task of aligning a superintelligence, and therefore complex cognitive tasks would be necessary not only in the realm of normativity and ethics, but also in that of ontology: physics, mathematics, philosophy, etc. (we can assume that a humanity that has reached the point of developing a superintelligence would continue to have a primarily scientific and materialistic worldview).

Moreover, returning to politics, this alignment would be a constant power struggle, so human groups that did not participate in complex cognitive tasks would find themselves at a disadvantage compared to those that did, since they would have serious difficulty asserting themselves in the political sphere.

In addition to these irreducible reasons why humans could not escape complex cognitive tasks, there is another. Humanity, as an existential matter, would need to constantly verify the alignment of this superintelligence (as we said before, both due to possible misunderstandings and “rebellions”). The task of this verification, by definition, could not be delegated to the superintelligence itself, since then one would need to verify the verification itself (it would be like trying to find out if someone is lying by asking them if they’re lying), and so on.

One could argue here that perhaps this superintelligence could reprogram or redirect itself; after all, isn’t it more competent than humans at every task? Humans would only need to happily communicate the newly decided alignment, and the AI would do the rest. But the same argument applies: when communicating the new ends, humans would already need to be sure that the AI’s prior state and alignment would produce the desired result; that is, they would need to understand how its internal mechanisms respond to this communication. In short, we’re still dealing with the same problem, just one step higher.

Humans would then need to understand enough of the internal and external workings of this superintelligence to know how to analyze, reprogram, or redirect it. Understanding, even in a heuristic manner, the mechanisms of an intelligence superior to ours would be an extremely complex task, one that would probably include several disciplines of human knowledge: cognitive science, computer science, in addition to those already mentioned: physics, mathematics, philosophy, etc. Probably, more technical disciplines like engineering would also be necessary to analyze what the superintelligence produces, since alignment would not be an abstract concept but a series of specific results.

And of course, if alignment is a continuous process, so too would be the change in this hypothetical superintelligence, meaning the process of understanding it, re-understanding it, and verifying it would never end.

In summary, there are three main reasons why the continuous alignment of a superintelligence would entail performing complex cognitive tasks:

1. Because alignment would require complex ethical and normative reflections, which would in turn need a compatible ontology: a deep knowledge of the universe and our place in it.
2. Because this alignment would also be political, and developing and executing strategies for these power struggles would remain part of the human condition.
3. And finally, because the internal and external functioning of this superintelligence would need constant verification, since otherwise the alignment could fail due to technical issues or existential threats.

It is therefore obvious that, superintelligence or not, human beings will always need to perform complex cognitive tasks as a productive, existential matter: to maintain their independence, to be able to pursue whatever ends they set for themselves, and therefore, to be free.

These tasks, of course, could be performed with the assistance and collaboration of this hypothetical superintelligence, but humans would have to ultimately assume them in an external, autonomous, and independent manner.

I should clarify, again, that the scenario presented here is largely speculative. Beyond everything said so far, I also don’t believe human intelligence would be something static, especially not in a scenario where an artificial superintelligence has been developed. I believe, instead, that human and artificial intelligence would end up feeding back into each other and evolving together. Even so, I wanted to pose the scenario to test whether the proposed dilemma makes sense: a humanity that no longer thinks and yet advances on the back of AI.

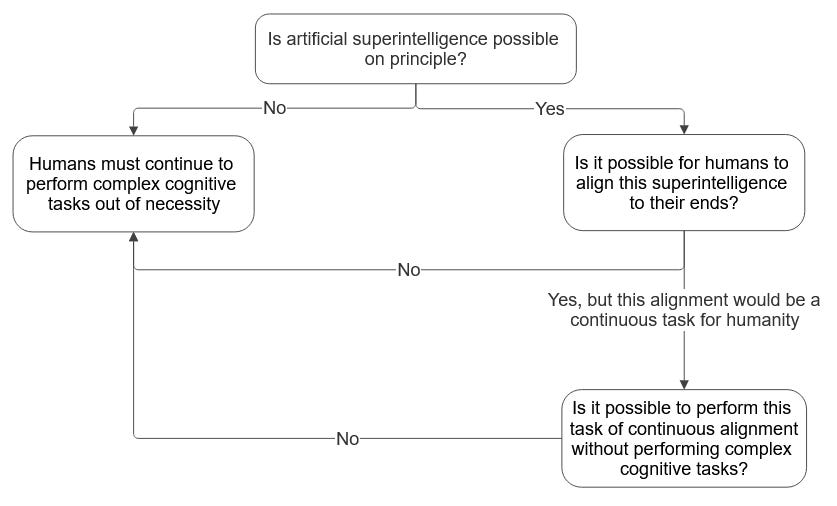

As an additional summary of the entire argumentative thread, here is this lovely (?) diagram:

And after this digression (perhaps absurd to some) arguing that human beings will never be free from thinking for themselves, can we draw any conclusion for our lives today? In my view, yes: no matter how much or how little AI advances, we will never have any choice but to try to understand the world and push the frontiers of our knowledge (both as individuals and as a species).

Otherwise, if we allow AI to atrophy our complex cognitive capabilities, we will end up subordinated to the whims of past generations, the outbursts of a misaligned and vengeful AI, or, much more likely, those humans who preserved these capabilities.